We all know that most of a project’s verification time is spent on debugging, the essence of which is reasoning from a failure back to its root cause. But taking a complex ASIC through verification closure to a successful tapeout involves much more than debugging. Engineers must understand test scenarios, identify the causes of failing tests and, most importantly, enhance tests so that no bug is missed.

Yet the traditional methods of debugging are reaching a breaking point. We all know the drill: sift through gigabytes of text logs, grep for error messages, find the relevant timestamps, open the waveform viewer, and mentally stitch together what happened.

It works, but it’s slow and it doesn’t scale. It doesn’t scale from IP to SoC to system. It doesn’t scale from team to team. It doesn’t scale to regressions. And it doesn’t scale to an agentic AI flow.

Instead, with a Verification Analytics approach, we can see at a glance:

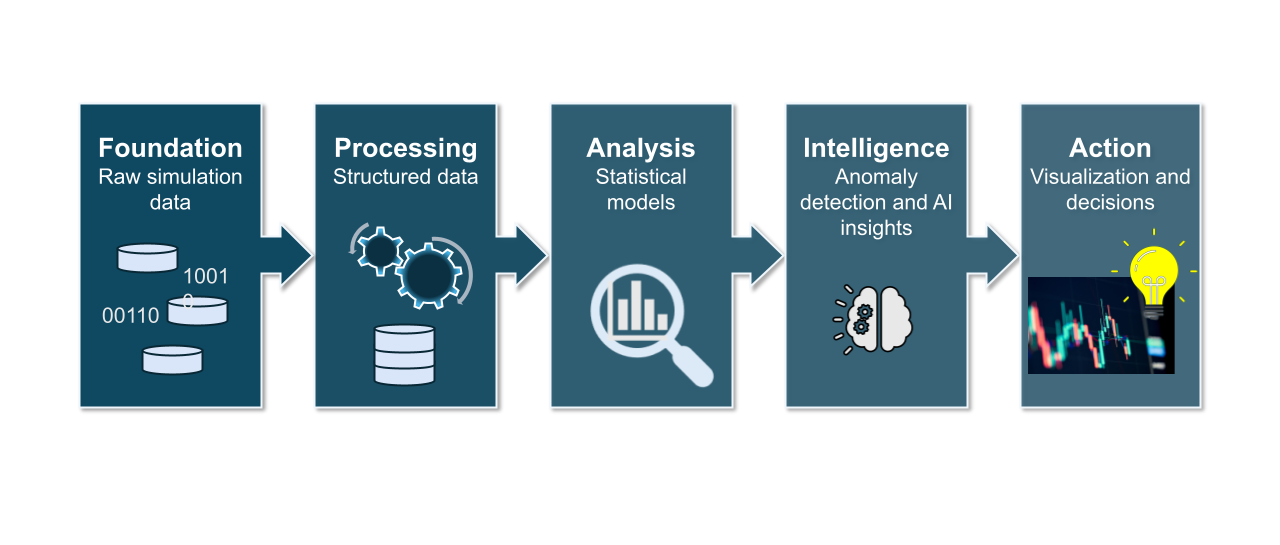

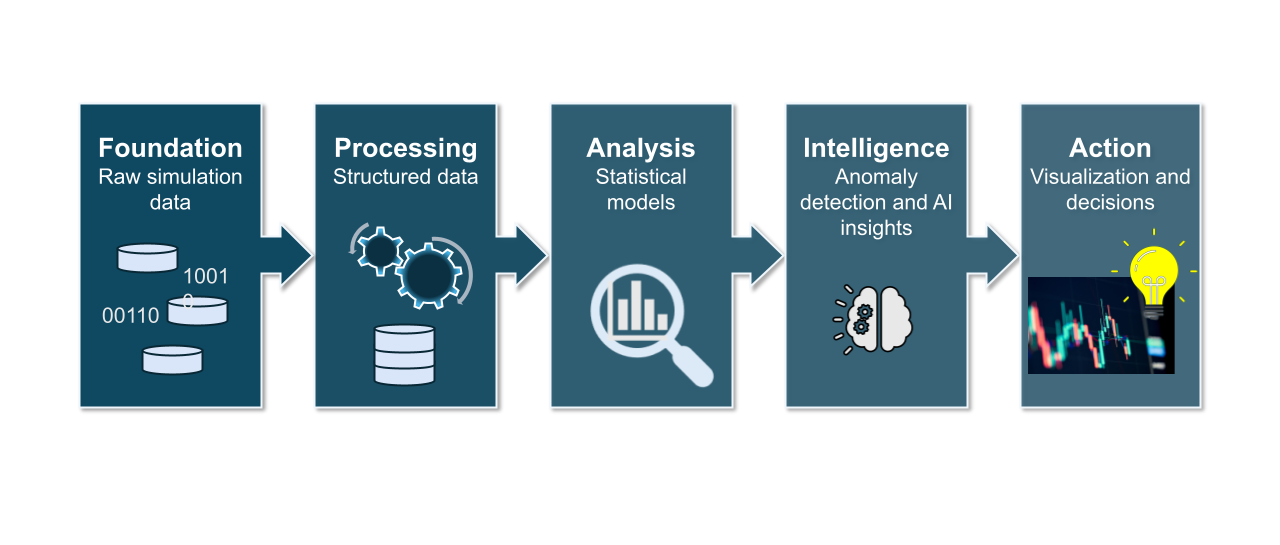

The Verification Analytics flow:

We are excited to introduce the Cogita-PRO paradigm: Verification Analytics, a fundamental shift in how we approach verification outcomes. Our core principle is simple: Verification is a Big Data problem.

Our Core Objectives:

Data Tells the Story

Simulations generate an enormous amount of data. By organizing this output into a structured form, we stop viewing it as "logs" and start viewing it as a comprehensive dataset. This allows for:

Verification Analytics: a different approach

Crafted by verification engineers, Cogita-PRO applies the rigor of big data techniques to uncover insights and root causes faster. This means collecting, cleaning, and organizing simulation data so that mathematical models can find relationships that are easy to miss with the naked eye.

The goal? To make data-driven decisions and let the tool tell the story of the bug.
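The idea of collecting, cleaning, and organizing simulation output can be illustrated with a minimal sketch. It assumes a simplified, UVM-like log line format and uses hypothetical helper names (`parse_log`, `signature`, `bucket_failures`); it is not Cogita-PRO’s implementation or API. Raw log lines become structured records, numeric data is masked so that repeated occurrences of the same failure collapse into one signature, and each signature is ranked by how many distinct tests in a regression hit it.

```python
import re
from collections import Counter
from dataclasses import dataclass

# Hypothetical, simplified UVM-like log format (an assumption for illustration), e.g.:
#   UVM_ERROR tb.sv(42) @ 1200ns: uvm_test_top.env.sb [SCB_MISMATCH] expected 0x1f got 0x2a
LOG_RE = re.compile(
    r"^(?P<severity>UVM_(?:INFO|WARNING|ERROR|FATAL))\s+"
    r"(?:\S+\(\d+\)\s+)?"                      # optional "file.sv(line)"
    r"@\s*(?P<time>\d+)\s*(?:[munpf]?s)?:\s+"  # timestamp with optional unit
    r"(?P<component>\S+)\s+\[(?P<msg_id>[^\]]+)\]\s*(?P<text>.*)$"
)

@dataclass(frozen=True)
class LogRecord:
    test: str
    severity: str
    time: int
    component: str
    msg_id: str
    text: str

def parse_log(test, lines):
    """Turn raw log lines into structured records, skipping lines that do not match."""
    records = []
    for line in lines:
        m = LOG_RE.match(line.strip())
        if m:
            records.append(LogRecord(test, m["severity"], int(m["time"]),
                                     m["component"], m["msg_id"], m["text"]))
    return records

def signature(rec):
    """Mask hex and decimal values so the same failure with different data
    collapses into a single signature."""
    text = re.sub(r"0x[0-9a-fA-F]+", "<HEX>", rec.text)
    text = re.sub(r"\b\d+\b", "<NUM>", text)
    return f"{rec.msg_id}: {text}"

def bucket_failures(records):
    """Count how many distinct tests hit each error/fatal signature."""
    tests_per_sig = {}
    for rec in records:
        if rec.severity in ("UVM_ERROR", "UVM_FATAL"):
            tests_per_sig.setdefault(signature(rec), set()).add(rec.test)
    return Counter({sig: len(tests) for sig, tests in tests_per_sig.items()})

# Illustrative regression with made-up log lines.
regression = {
    "test_dma_seed1": [
        "UVM_INFO @ 0ns: uvm_test_top [START] test started",
        "UVM_ERROR @ 1200ns: uvm_test_top.env.sb [SCB_MISMATCH] expected 0x1f got 0x2a",
    ],
    "test_dma_seed7": [
        "UVM_ERROR tb.sv(42) @ 3400ns: uvm_test_top.env.sb [SCB_MISMATCH] expected 0x03 got 0x10",
    ],
    "test_irq_seed2": [
        "UVM_FATAL @ 50ns: uvm_test_top.env.irq [TIMEOUT] no interrupt after 1000 cycles",
    ],
}
records = [r for test, lines in regression.items() for r in parse_log(test, lines)]
for sig, n in bucket_failures(records).most_common():
    print(n, sig)
# 2 SCB_MISMATCH: expected <HEX> got <HEX>
# 1 TIMEOUT: no interrupt after <NUM> cycles
```

Counting distinct tests rather than raw error lines keeps one noisy test from dominating the ranking. A production flow would also have to handle multi-line messages, vendor-specific formats, and much larger volumes than this in-memory sketch.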
